For some time we’ve been increasingly interested in feeding coverage data from manual testing back into the development cycle of our software. Until now it’s been a bit tedious to teach users, and even QA engineers, to use the old-style NCover 3 command lines to collect coverage files and then pull those individual files together for merging. NCover 4 has removed all that hassle. Testers can simply run their applications and let NCover do the work in the background.

figure 1.

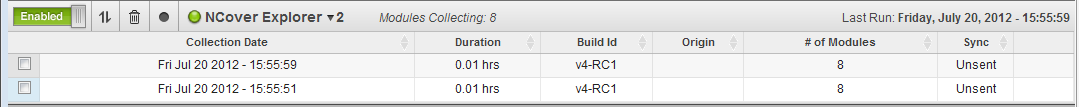

NCover 4 introduced a new client called Collector. This client brings the NCover coverage profiler to a wider audience with a lighter footprint. Used in conjunction with Code Central, it lets us create shared projects that are distributed to the various testing machines around our organization. Once Collector is installed on those machines, I can control project definitions and collections from a central location, and my testers need to know very little about NCover projects and executions. End users can still disable local coverage or pause data synchronization (figure 1). They can even delete local coverage data without sending it to a server. In this day of remote resources, the ability to disconnect from the Code Central server is essential. A tester can disconnect by unplugging from the network or by choosing “Pause Data Load” (figure 2). Whenever they plug back in or choose “Resume Data Upload”, their collected executions are synced automatically.

figure 2.

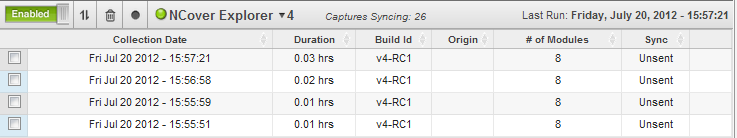

When the user starts the application locally, the collection of coverage looks similar to what we normally see in Desktop (figure 3). The major departure from what we’ve seen in the past is that the user simply starts the application in any normal fashion. Because the Collector service monitors process starts globally on the machine, collection begins automatically as soon as the appropriate process starts.

figure 3.

I’ve disabled data synchronization in figure 3 to demonstrate that data can be gathered offline and synced to Code Central at a later time. Each time I start my application from a shortcut or the start menu in Windows I get a new execution. In fact I can start multiple copies of my application simultaneously and they are all covered independently. Collector’s interface has limited analysis capability so what the user sees is simply a list of projects and the number of executions he has captured. He has some control over this data as seen from the menu options in figure 2: pause, delete, sync now.

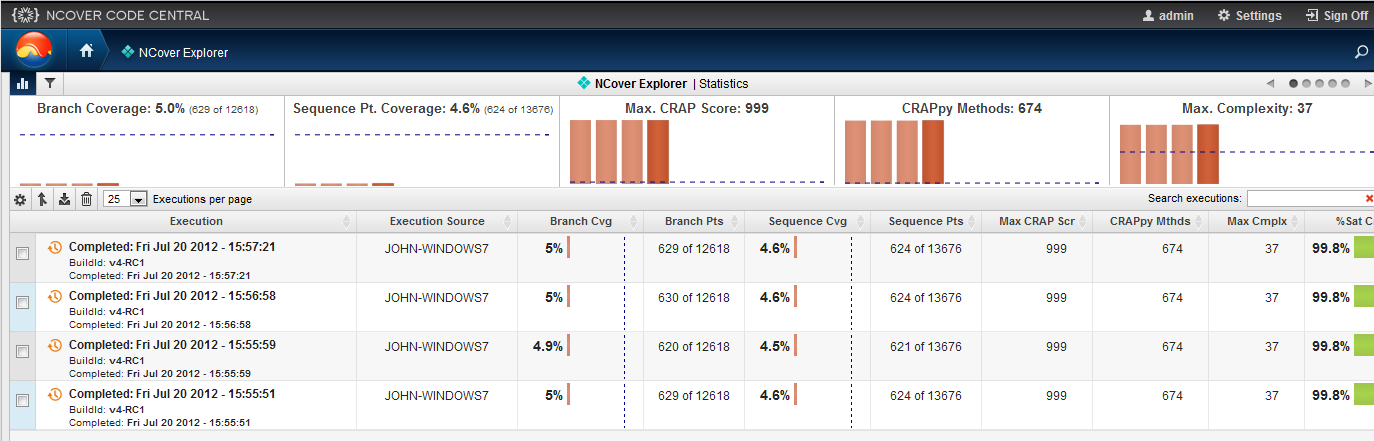

Once I turn on synchronization for my project, the executions stored locally on my testing machine are sent to Code Central (figure 4). Once an execution is synced, the local copy is purged. If synchronization fails, I can mark an execution as unsent and it will be re-sent when connectivity is restored or when I select Sync Now from the project menu.

figure 4.

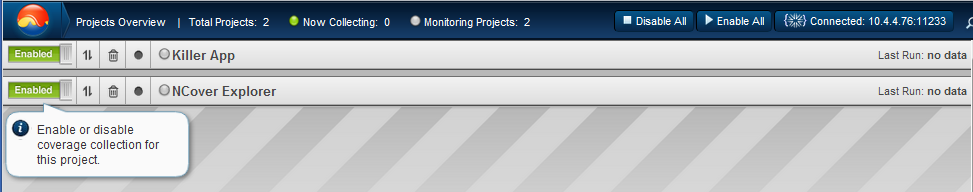
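The store-and-forward behavior described above (collect locally, upload when connected, purge on success, keep and retry on failure) can be sketched in a few lines of Python. This is not Collector’s implementation or API. `Execution`, `LocalStore`, and the `upload` callback are hypothetical names used purely to illustrate the idea.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Execution:
    """One captured run of the application (hypothetical model)."""
    name: str


@dataclass
class LocalStore:
    """Hypothetical stand-in for the executions held on a testing machine."""
    paused: bool = False
    executions: List[Execution] = field(default_factory=list)

    def capture(self, name: str) -> None:
        # Collection keeps working even while synchronization is paused.
        self.executions.append(Execution(name))

    def sync(self, upload: Callable[[Execution], bool]) -> int:
        """Try to upload every local execution; return how many were sent."""
        if self.paused:
            return 0
        sent_count = 0
        remaining: List[Execution] = []
        for execution in self.executions:
            try:
                ok = upload(execution)
            except OSError:
                # Treat a connection error as a failed upload.
                ok = False
            if ok:
                # Purge: a sent execution is not carried into `remaining`.
                sent_count += 1
            else:
                # Keep the execution locally so a later sync retries it.
                remaining.append(execution)
        self.executions = remaining
        return sent_count
```

Calling `sync` while `paused` is true sends nothing and leaves every execution in place. After `paused` is set back to false, the next `sync` uploads whatever has accumulated, and only the executions that failed to upload stay behind.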

The real gem is that when testers complete the data collection I can manage their executions on Code Central. Code Central reports the user and machine that uploaded the data and this allows me great flexibility in managing the data collection (figure 5). At this point I can rename and merge the executions as needed.

figure 5.

In my example notice too that I have set the Build Id for all executions to v4-RC1. This marker was collected from the individual testing machines and means that coverage for multiple software versions can be easily distinguished in a central location.
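To make the idea of merging executions per build concrete, here is a minimal Python sketch. It assumes each uploaded execution can be reduced to a set of covered code-point IDs, which is a simplification and not NCover’s actual data format. `UploadedExecution`, `merge_by_build`, and `coverage_percent` are hypothetical names.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, FrozenSet, Iterable, Set


@dataclass(frozen=True)
class UploadedExecution:
    """Hypothetical record of one execution as seen on the central server."""
    user: str
    machine: str
    build_id: str
    covered: FrozenSet[int]  # IDs of the code points hit during this run


def merge_by_build(executions: Iterable[UploadedExecution]) -> Dict[str, Set[int]]:
    """Union the covered points of all executions that share a build ID."""
    merged: Dict[str, Set[int]] = defaultdict(set)
    for execution in executions:
        merged[execution.build_id] |= execution.covered
    return dict(merged)


def coverage_percent(covered: Set[int], total_points: int) -> float:
    """Share of code points hit, given the total number of points in the build."""
    if total_points <= 0:
        raise ValueError("total_points must be positive")
    return 100.0 * len(covered) / total_points
```

Because merging is a set union, two testers who exercise overlapping features are not double counted, and executions tagged with a different build ID stay in their own bucket.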

It doesn’t take long to realize the power this provides to a project or development manager who has distributed teams, not to mention the insight it gives into testing work that may be outsourced across the building or around the world. This tool becomes a great point of leverage and shows how complete the testing is from the manual QA perspective. As a development manager I’m not simply left to trust that the testing was done, and I’m not blind to what was missed. By dovetailing coverage from manual testing with the coverage more commonly provided by my development team, I am now able to make smarter decisions about how to allocate resources for testing.